AWS Glue: 7 Powerful Features You Must Know in 2024

Ever felt overwhelmed by messy data scattered across systems? AWS Glue is your ultimate solution—a fully managed ETL service that simplifies data integration with zero infrastructure hassles. Let’s dive into how it transforms raw data into gold.

What Is AWS Glue and Why It Matters

AWS Glue is a fully managed extract, transform, and load (ETL) service that automates the process of preparing and loading data for analytics. It’s designed to handle the heavy lifting of data integration, making it easier for developers, data engineers, and analysts to move data between different data stores.

Core Definition and Purpose

At its heart, AWS Glue is built to solve one of the biggest challenges in data engineering: ETL. Extracting data from various sources, transforming it into a usable format, and loading it into a destination system (like a data warehouse or data lake) is traditionally time-consuming and complex. AWS Glue automates much of this workflow.

- Eliminates the need to manually write ETL scripts from scratch.

- Integrates seamlessly with other AWS services like Amazon S3, Redshift, RDS, and DynamoDB.

- Supports both structured and semi-structured data formats including JSON, CSV, Parquet, and ORC.

According to AWS’s official documentation, Glue “makes it easy to prepare and load data for analytics.” This simplicity is what makes it a go-to tool for modern data pipelines.

How AWS Glue Fits Into the Modern Data Stack

In today’s data-driven world, organizations collect data from multiple sources—web apps, IoT devices, CRM systems, and more. This data often lives in silos, making analysis difficult. AWS Glue acts as the connective tissue, enabling a unified view of enterprise data.

- Acts as a central hub in a data lake architecture on Amazon S3.

- Enables data cataloging and schema discovery through its Data Catalog.

- Supports both batch and near-real-time (streaming) processing workflows.

“AWS Glue reduces the time it takes to build ETL jobs from weeks to minutes.” — AWS Customer Testimonial

Key Components of AWS Glue

To truly understand how AWS Glue works, you need to explore its core components. Each plays a distinct role in the ETL lifecycle, from discovery to execution.

AWS Glue Data Catalog

The Data Catalog is a persistent metadata store that acts like a centralized repository for table definitions, schemas, and data locations. Think of it as a dynamic inventory of your data assets.

- Stores metadata in a format compatible with Apache Hive Metastore, enabling interoperability with tools like Amazon Athena and EMR.

- Automatically populated by Glue Crawlers, which scan data sources and infer schema.

- Supports tagging and partitioning for better organization and query performance.

For example, if you have customer data in CSV files stored in S3, a crawler can detect the columns (name, email, purchase_date), data types, and file location, then register them as a table in the Data Catalog.
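A crawler's schema inference can be pictured with a toy version. The sketch below is a simplified model written for illustration, assuming a CSV file with a header row; the function names, type rules, and sample data are hypothetical and do not reproduce the logic of Glue's built-in CSV classifier. It guesses a Hive-style type for each column, widening `bigint` to `double` or falling back to `string` when rows disagree.

```python
import csv
import io
from datetime import date


def _guess_type(value: str) -> str:
    """Return a Hive-style type name for a single string value (toy rules)."""
    try:
        int(value)
        return "bigint"
    except ValueError:
        pass
    try:
        float(value)
        return "double"
    except ValueError:
        pass
    try:
        date.fromisoformat(value)
        return "date"
    except ValueError:
        return "string"


def infer_csv_schema(text: str) -> dict:
    """Infer {column: type} from CSV text with a header row (hypothetical helper)."""
    schema = {}
    for row in csv.DictReader(io.StringIO(text)):
        for column, value in row.items():
            guessed = _guess_type(value)
            previous = schema.get(column)
            if previous is None or previous == guessed:
                schema[column] = guessed
            elif {previous, guessed} == {"bigint", "double"}:
                schema[column] = "double"  # widen integers to doubles
            else:
                schema[column] = "string"  # conflicting types fall back to string
    return schema


sample = (
    "name,email,purchase_date,amount\n"
    "Ana,ana@example.com,2023-12-05,19.99\n"
    "Bo,bo@example.com,2023-12-06,5\n"
)
print(infer_csv_schema(sample))
```

Real crawlers handle far more formats and edge cases; the point here is only the "pick a type that fits every sampled value" idea.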

Glue Crawlers and Classifiers

Crawlers are automated agents that traverse your data stores (S3, RDS, JDBC sources) and extract schema information. They use classifiers to determine the data format—whether it’s JSON, CSV, XML, or custom logs.

- Run on a schedule or triggered manually.

- Can update existing tables or create new ones in the Data Catalog.

- Support custom classifiers using Grok patterns for unstructured log data.

For instance, a crawler can detect that a folder contains Apache web server logs and apply a built-in classifier to parse fields like IP address, timestamp, and HTTP status code.
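Grok patterns are essentially named, reusable regular expressions. As a rough illustration of what such a classifier extracts, the plain-Python sketch below uses a regular expression with named groups for the Apache common log format to pull out the IP address, timestamp, and HTTP status code. The pattern, field names, and sample line are this article's own simplification, not the exact expansion of Glue's built-in Grok patterns.

```python
import re
from typing import Optional

# Named groups play the role that %{IP:client}-style captures play in Grok.
APACHE_COMMON = re.compile(
    r'(?P<client_ip>\S+) \S+ (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_log_line(line: str) -> Optional[dict]:
    """Parse one Apache common-log line into a dict, or return None if it doesn't match."""
    match = APACHE_COMMON.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    # Apache writes "-" when no response body was sent.
    record["bytes"] = 0 if record["bytes"] == "-" else int(record["bytes"])
    return record


line = '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326'
print(parse_log_line(line))
```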

Glue ETL Jobs

ETL Jobs are the workhorses of AWS Glue. These are scripts (written in Python or Scala) that define how data should be transformed and moved. Glue provides a visual editor and auto-generates boilerplate code, significantly reducing development time.

- Run on a fully managed Apache Spark environment—no cluster management needed.

- Support incremental processing using job bookmarks to avoid reprocessing old data.

- Can be triggered by events (e.g., new file in S3) via AWS Lambda or EventBridge.

You can write a job to clean customer data, join it with order history, and load the result into Redshift for BI reporting—all without managing servers.
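Stripped of Spark, the logic of such a job is a cleaning step followed by a join and an aggregate. The sketch below expresses it in plain Python over lists of dicts so it runs anywhere; in a real Glue job the same steps would run as PySpark transformations on DynamicFrames or DataFrames before writing to Redshift. The field names (`customer_id`, `email`, `order_total`, `lifetime_spend`) and sample rows are hypothetical.

```python
from collections import defaultdict


def clean_customers(customers):
    """Trim and lowercase emails; drop rows missing a customer_id or an email."""
    cleaned = []
    for row in customers:
        email = (row.get("email") or "").strip().lower()
        if row.get("customer_id") is None or not email:
            continue
        cleaned.append({**row, "email": email})
    return cleaned


def join_orders(customers, orders):
    """Inner-join customers to orders on customer_id and total each customer's spend."""
    totals = defaultdict(float)
    for order in orders:
        totals[order["customer_id"]] += order["order_total"]
    return [
        {**c, "lifetime_spend": totals[c["customer_id"]]}
        for c in customers
        if c["customer_id"] in totals  # inner join: customers without orders are dropped
    ]


customers = [
    {"customer_id": 1, "email": " Ana@Example.com "},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "bo@example.com"},
]
orders = [
    {"customer_id": 1, "order_total": 20.0},
    {"customer_id": 1, "order_total": 5.5},
    {"customer_id": 2, "order_total": 9.0},
]
result = join_orders(clean_customers(customers), orders)
print(result)
```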

How AWS Glue Works: Step-by-Step Process

Understanding the workflow of AWS Glue helps demystify how it automates ETL. The process is intuitive and follows a logical sequence from data discovery to job execution.

Step 1: Setting Up Data Sources

Before any ETL can happen, you need to define where your data lives. AWS Glue supports a wide range of sources:

- Amazon S3 (most common for data lakes)

- Amazon RDS (MySQL, PostgreSQL, Oracle, SQL Server)

- Aurora, DynamoDB, Redshift, and external JDBC databases

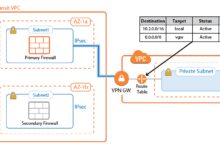

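You specify connection details such as the JDBC URL, VPC, subnet, and security groups, plus credentials, which are best referenced from AWS Secrets Manager rather than typed into the connection. The crawler or job itself runs under an IAM role that grants it access to the source.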

Step 2: Running a Crawler to Populate the Data Catalog

Once sources are defined, you create a crawler. The crawler connects to the source, scans the data, and infers the schema. It then creates or updates a table in the Glue Data Catalog.

- You can assign multiple crawlers to different folders or databases.

- Crawlers can run on a schedule (daily, hourly) to capture schema changes.

- Supports partition detection—e.g., files organized by year/month/day.

For example, if your S3 bucket has a path like s3://my-data/customers/year=2023/month=12/, the crawler will detect year and month as partition keys, improving query efficiency later.
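Hive-style partition folders encode `key=value` pairs in the S3 path, which is what lets a crawler turn them into partition columns and lets query engines skip irrelevant folders. The sketch below parses those pairs from object keys and filters keys by partition value, a simple form of partition pruning; the object keys and helper names are illustrative assumptions.

```python
def partition_values(key: str) -> dict:
    """Extract Hive-style key=value partition pairs from an S3 object key."""
    values = {}
    for segment in key.split("/")[:-1]:  # the last segment is the file name
        name, sep, value = segment.partition("=")
        if sep:
            values[name] = value
    return values


def prune(keys, **wanted):
    """Keep only keys whose partition values match every requested name=value pair."""
    return [
        k for k in keys
        if all(partition_values(k).get(n) == v for n, v in wanted.items())
    ]


keys = [
    "customers/year=2023/month=11/part-0000.parquet",
    "customers/year=2023/month=12/part-0000.parquet",
    "customers/year=2024/month=01/part-0000.parquet",
]
print(partition_values(keys[1]))             # {'year': '2023', 'month': '12'}
print(prune(keys, year="2023", month="12"))  # only the December 2023 file
```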

Step 3: Creating and Running ETL Jobs

With metadata in place, you can now create an ETL job. AWS Glue Studio offers a drag-and-drop interface, while advanced users can code directly in the Glue console.

- Select source and target tables from the Data Catalog.

- Apply transformations like filtering, joining, aggregating, or applying custom logic.

- Choose the script language (Python PySpark or Scala Spark).

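The generated script uses the Glue ETL library, which extends Apache Spark with abstractions such as the DynamicFrame. Unlike a Spark DataFrame, a DynamicFrame does not require a fixed schema up front (each record describes itself), which makes messy, semi-structured data easier to handle than with raw Spark.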
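One problem DynamicFrame addresses is a column whose type differs between records, for example `price` stored as a number in some files and as a string in others. Glue represents such a column as a choice type and lets you resolve it, for instance with `resolveChoice(specs=[("price", "cast:double")])`. The plain-Python sketch below only mimics that idea on a list of dicts; the function names and sample rows are hypothetical, and this is not how Glue implements choice types internally.

```python
def find_choice_fields(records):
    """Return {field: set of type names} for fields seen with more than one type."""
    seen = {}
    for record in records:
        for field, value in record.items():
            seen.setdefault(field, set()).add(type(value).__name__)
    return {field: types for field, types in seen.items() if len(types) > 1}


def resolve_cast_double(records, field):
    """Cast one field to float in every record, similar in spirit to 'cast:double'.

    In this sketch, values that cannot be converted become None instead of raising.
    """
    resolved = []
    for record in records:
        value = record.get(field)
        try:
            new_value = float(value) if value is not None else None
        except (TypeError, ValueError):
            new_value = None
        resolved.append({**record, field: new_value})
    return resolved


rows = [
    {"sku": "A1", "price": 19.99},
    {"sku": "B2", "price": "5"},
    {"sku": "C3", "price": "n/a"},
]
print(find_choice_fields(rows))
print(resolve_cast_double(rows, "price"))
```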

Step 4: Monitoring and Logging

After job execution, monitoring is crucial. AWS Glue integrates with CloudWatch for logs and metrics.

- View real-time logs in CloudWatch Logs.

- Track job duration, memory usage, and error rates.

- Set up alarms for job failures using CloudWatch Alarms.

You can also use AWS Glue’s built-in job runs dashboard to see success/failure history and retry failed jobs.

Advantages of Using AWS Glue Over Traditional ETL Tools

Compared to on-premises ETL tools like Informatica or Talend, AWS Glue offers several compelling advantages, especially for cloud-native environments.

Fully Managed Infrastructure

One of the biggest pain points in traditional ETL is managing servers, clusters, and dependencies. AWS Glue eliminates this by providing a serverless architecture.

- No need to provision or scale EC2 instances.

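- You choose a worker type and the number of workers (a G.1X worker is 1 DPU, or Data Processing Unit); with Auto Scaling enabled on Glue 3.0 and later, Glue adds and removes workers within that limit as the workload changes.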

- Patches, updates, and maintenance are handled by AWS.

This reduces operational overhead and allows teams to focus on data logic rather than infrastructure.

Seamless Integration with AWS Ecosystem

AWS Glue isn’t an isolated tool—it’s deeply integrated with other AWS services, creating a powerful data ecosystem.

- Use Amazon Athena to query data cataloged by Glue.

- Feed transformed data into Amazon Redshift for analytics.

- Trigger Glue jobs from S3 event notifications or AWS Lambda.

For example, when a new sales file lands in S3, an S3 event triggers a Lambda function, which starts a Glue job to process and load the data into a Redshift cluster.
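A minimal sketch of the Lambda function in that flow, assuming the Python runtime and boto3's Glue client, whose `start_job_run` call is real. The job name `process-sales-file` and the `--source_bucket`/`--source_key` argument names are hypothetical; inside the Glue script they would be read with `getResolvedOptions` from `awsglue.utils`. The Glue client is injectable so the handler can be exercised without AWS credentials.

```python
from urllib.parse import unquote_plus

GLUE_JOB_NAME = "process-sales-file"  # hypothetical job name


def lambda_handler(event, context, glue_client=None):
    """Start one Glue job run per object listed in an S3 event notification."""
    if glue_client is None:
        import boto3  # included in the AWS Lambda Python runtime
        glue_client = boto3.client("glue")
    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName=GLUE_JOB_NAME,
            Arguments={"--source_bucket": bucket, "--source_key": key},
        )
        run_ids.append(response["JobRunId"])
    return {"job_run_ids": run_ids}
```

Starting one run per object keeps the handler simple, but a burst of files can exceed the job's maximum concurrent runs; for frequent small files, a scheduled job with bookmarks is often the better fit.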

Cost-Effectiveness and Pay-as-You-Go Model

Traditional ETL tools often require upfront licensing costs and dedicated hardware. AWS Glue follows a pay-per-use model.

- You only pay for the DPU-hours consumed during job execution.

- No compute charges when jobs aren’t running (Data Catalog storage and requests beyond the free tier are billed separately).

- Costs are predictable and can be monitored via AWS Cost Explorer.

For startups and mid-sized companies, this makes Glue a financially scalable option.
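The pay-per-use model is easy to reason about with a little arithmetic: cost = DPUs × billed hours × rate. The sketch below assumes per-second billing with a one-minute minimum (which AWS documents for Glue 2.0 and later Spark jobs) and the $0.44 per DPU-hour US East rate quoted in the pricing FAQ at the end of this article; check current regional pricing before relying on the numbers. The function name is hypothetical.

```python
import math


def glue_job_cost(dpus: float, runtime_seconds: float,
                  rate_per_dpu_hour: float = 0.44, minimum_seconds: int = 60) -> float:
    """Estimate the compute cost (USD) of one job run.

    Assumes per-second billing with a minimum billed duration; the default rate
    and minimum are assumptions to verify against current pricing.
    """
    billed_seconds = max(math.ceil(runtime_seconds), minimum_seconds)
    dpu_hours = dpus * billed_seconds / 3600
    return round(dpu_hours * rate_per_dpu_hour, 4)


# 10 DPUs for 15 minutes = 2.5 DPU-hours
print(glue_job_cost(10, 15 * 60))  # 1.1
# A 20-second run is still billed for the 60-second minimum
print(glue_job_cost(2, 20))
```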

Common Use Cases for AWS Glue

AWS Glue is versatile and can be applied across industries and data scenarios. Here are some of the most impactful use cases.

Data Lake Construction and Management

Building a data lake on Amazon S3 is a common goal for enterprises. AWS Glue plays a central role in ingesting, cataloging, and transforming raw data.

- Ingest data from on-premises databases, SaaS apps, and logs.

- Apply schema enforcement and data quality checks.

- Organize data into curated zones (raw, cleaned, aggregated).

Once structured, data can be queried using Athena or visualized in QuickSight.

Real-Time Data Pipelines with Glue Streaming

While Glue is known for batch processing, it also supports streaming ETL built on Apache Spark Structured Streaming.

- Process data from Amazon Kinesis Data Streams, Amazon MSK (Managed Streaming for Apache Kafka), or self-managed Apache Kafka.

- Apply transformations in near real-time (e.g., fraud detection, IoT telemetry).

- Load results into dashboards or alerting systems.

This capability bridges the gap between traditional batch ETL and real-time analytics.
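Inside a streaming job, a typical near-real-time transformation is an aggregate over fixed time windows. The sketch below models that in plain Python rather than Spark: it groups timestamped readings into tumbling windows and keeps the maximum value per device per window, the sort of step an IoT telemetry or alerting pipeline might use. The event shape, device names, and 100-second window are assumptions for illustration.

```python
from collections import defaultdict


def tumbling_window_max(events, window_seconds=100):
    """Group (epoch_seconds, device_id, value) events into fixed, non-overlapping windows.

    Returns {(window_start, device_id): maximum value seen in that window}.
    """
    result = defaultdict(lambda: float("-inf"))
    for ts, device, value in events:
        window_start = ts - (ts % window_seconds)
        key = (window_start, device)
        result[key] = max(result[key], value)
    return dict(result)


events = [
    (0, "sensor-1", 21.5),
    (42, "sensor-1", 23.0),
    (99, "sensor-2", 19.0),
    (130, "sensor-1", 30.2),
]
print(tumbling_window_max(events))
# {(0, 'sensor-1'): 23.0, (0, 'sensor-2'): 19.0, (100, 'sensor-1'): 30.2}
```

In an actual Glue streaming job, the same logic would typically be written with Spark's windowing functions on the streaming DataFrame.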

Migration to Cloud Data Warehouses

Many organizations are migrating from on-premise data warehouses to cloud solutions like Amazon Redshift or Snowflake. AWS Glue simplifies this transition.

- Extract data from legacy systems (Oracle, Teradata).

- Transform schemas to fit cloud warehouse models (star/snowflake).

- Load data incrementally to minimize downtime.

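Glue’s job bookmarks ensure that each run processes only data added since the previous run. For JDBC sources this relies on a bookmark key that increases monotonically (the primary key by default), so updates to existing rows are picked up only if the key reflects them.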
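For JDBC sources, a bookmark works by remembering the highest value of the bookmark key and reading only rows beyond it on the next run. The sketch below models that high-water-mark idea with an in-memory SQLite table standing in for a legacy database; the `orders` table, its columns, and the `load_increment` helper are hypothetical, and in Glue the bookmark state is stored by the service rather than in your code.

```python
import sqlite3


def load_increment(conn, last_key):
    """Return rows with id greater than last_key, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id",
        (last_key,),
    ).fetchall()
    new_key = rows[-1][0] if rows else last_key
    return rows, new_key


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "ana", 20.0), (2, "bo", 5.5)])

bookmark = 0
first_batch, bookmark = load_increment(conn, bookmark)   # reads ids 1 and 2
conn.execute("INSERT INTO orders VALUES (3, 'cy', 12.0)")
second_batch, bookmark = load_increment(conn, bookmark)  # reads only id 3
print(len(first_batch), len(second_batch), bookmark)     # 2 1 3
```

Because only keys above the high-water mark are read, an `UPDATE` to row 1 would not be picked up by the next run; that is the trade-off behind choosing a monotonically increasing key.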

Best Practices for Optimizing AWS Glue Performance

To get the most out of AWS Glue, follow these proven best practices that improve speed, reduce cost, and enhance reliability.

Use Job Bookmarks to Avoid Duplicate Processing

Job bookmarks track the state of data processing, ensuring that only new or modified data is processed in subsequent runs.

- Enable bookmarks in job settings to skip already-processed files.

- Supports incremental loads from Amazon S3 and JDBC sources.

- Reduces execution time and DPU consumption.

For example, if you receive daily sales files, a bookmarked job will only process the latest file instead of scanning all historical data.
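For Amazon S3 sources, bookmarks decide what is new largely from object modification times. The sketch below is a toy model of that behavior: it keeps the latest modification time it has processed and selects only objects modified after it. The file names, timestamps, and `new_files` helper are hypothetical, and this version ignores objects that share the boundary timestamp, an edge case Glue tracks for you.

```python
from datetime import datetime, timezone


def new_files(listing, bookmark):
    """Select objects modified after the bookmark; return their keys and the new bookmark.

    listing: iterable of (key, last_modified) pairs, e.g. taken from an S3 listing.
    """
    selected = sorted((item for item in listing if item[1] > bookmark), key=lambda item: item[1])
    updated = selected[-1][1] if selected else bookmark
    return [key for key, _ in selected], updated


def utc(day):
    return datetime(2024, 3, day, 1, 0, tzinfo=timezone.utc)


listing = [("sales/2024-03-01.csv", utc(1)), ("sales/2024-03-02.csv", utc(2))]
bookmark = datetime.min.replace(tzinfo=timezone.utc)
run1, bookmark = new_files(listing, bookmark)  # both files on the first run
listing.append(("sales/2024-03-03.csv", utc(3)))
run2, bookmark = new_files(listing, bookmark)  # only the file that arrived since
print(run2)  # ['sales/2024-03-03.csv']
```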

Optimize DPU Allocation and Partitioning

DPU (Data Processing Unit) determines the compute power allocated to your job. Choosing the right DPU count is crucial.

- Start with the default (10 DPUs for a Spark job; the minimum is 2) and monitor performance.

- Increase DPUs for large datasets or complex transformations.

- Use partitioning in S3 to enable parallel processing and reduce I/O.

Also, consider using repartition() or coalesce() in your Spark code to optimize data distribution.
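To make DPU counts concrete, the sketch below converts a worker configuration into total capacity, assuming 1 DPU = 4 vCPUs and 16 GB of memory and the DPU sizes of the G.1X through G.8X worker types. The totals include the worker that hosts the Spark driver, so the capacity available to executors is somewhat lower. The function name is hypothetical.

```python
# DPUs per worker for common Glue worker types (1 DPU = 4 vCPUs and 16 GB of memory).
WORKER_DPUS = {"G.1X": 1, "G.2X": 2, "G.4X": 4, "G.8X": 8}


def job_capacity(worker_type: str, num_workers: int) -> dict:
    """Return total DPUs, vCPUs, and memory (GB) for a job configuration."""
    dpus = WORKER_DPUS[worker_type] * num_workers
    return {"dpus": dpus, "vcpus": dpus * 4, "memory_gb": dpus * 16}


print(job_capacity("G.1X", 10))  # {'dpus': 10, 'vcpus': 40, 'memory_gb': 160}
print(job_capacity("G.2X", 10))  # {'dpus': 20, 'vcpus': 80, 'memory_gb': 320}
```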

Leverage Glue Elastic Views for Real-Time Materialized Views

Glue Elastic Views, which AWS offered as a preview feature, let you create materialized views that combine data from multiple sources without writing ETL jobs.

- Use PartiQL, a SQL-compatible query language, to define views over sources such as DynamoDB.

- Views are updated in near real-time as source data changes.

- Ideal for creating unified customer profiles or operational dashboards.

This feature reduces the need for complex streaming ETL pipelines.

Challenges and Limitations of AWS Glue

While AWS Glue is powerful, it’s not without limitations. Being aware of these helps in planning and mitigating risks.

Learning Curve for Spark and Python

Although Glue auto-generates code, effective customization requires knowledge of PySpark or Scala and Apache Spark concepts.

- New users may struggle with transformations like map(), filter(), and join().

- Debugging distributed Spark jobs can be complex.

- Performance tuning requires understanding of partitioning and shuffling.

Investing in training or using Glue Studio’s visual interface can ease this transition.

Cold Start Latency

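Because Glue provisions compute on demand, each job run has a startup delay while the Spark environment initializes. On Glue 2.0 and later, most jobs start within about a minute; earlier versions could take several minutes.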

- Not ideal for sub-second latency requirements.

- Can be mitigated by using Glue 2.0 or later, or by running a streaming ETL job, which stays running instead of starting for each batch.

- For real-time needs, consider Amazon Managed Service for Apache Flink (formerly Kinesis Data Analytics) instead.

This delay is acceptable for most batch workloads but can be a bottleneck for time-sensitive pipelines.

Cost Management for Long-Running Jobs

While pay-per-use is cost-effective, long-running or inefficient jobs can become expensive.

- Monitor DPU-hours in AWS Cost Explorer.

- Set job timeouts to prevent runaway executions.

- Use fewer workers, smaller worker types, or a Python shell job for lightweight workloads.

Regularly review job logs and optimize code to reduce execution time.

Future of AWS Glue: Trends and Innovations

AWS is continuously enhancing Glue with new features that align with modern data trends.

Integration with Machine Learning and AI

AWS Glue now integrates with Amazon SageMaker for machine learning workflows and with AWS Lake Formation for data governance, and it offers built-in machine learning transforms for data preparation.

- Use the FindMatches ML transform to deduplicate records and link records that refer to the same entity, even without a shared unique identifier.

- Lake Formation provides fine-grained access control over Glue Data Catalog.

- Glue DataBrew (a visual data preparation tool) complements Glue for non-coders.

These integrations make Glue a key player in AI-driven data pipelines.

Serverless Spark and Interactive Development

AWS Glue now offers interactive sessions: on-demand, serverless Spark environments for interactive querying and job development without provisioning a cluster.

- Glue Studio notebooks let you write and test code interactively.

- Supports interactive sessions for data exploration.

- Reduces time-to-insight for data engineers.

This shift toward interactivity makes Glue more developer-friendly.

Enhanced Support for Open Table Formats

With the rise of open data lakehouse architectures, AWS Glue now supports Apache Iceberg, Delta Lake, and Apache Hudi.

- Enables time travel, ACID transactions, and schema evolution.

- Improves compatibility with third-party tools like Databricks and Trino.

- Future-proofs your data architecture.

This positions AWS Glue as a leader in the open data movement.

What is AWS Glue used for?

AWS Glue is used for automating ETL (extract, transform, load) processes. It helps discover, prepare, and move data between various sources and targets, such as S3, Redshift, and RDS, making it ideal for data lakes and analytics.

Is AWS Glue serverless?

Yes, AWS Glue is a fully serverless service. It provisions the capacity you configure for each run (measured in DPUs) and, with Auto Scaling enabled, adds or removes workers within that limit, so you don’t have to manage servers or clusters.

How much does AWS Glue cost?

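AWS Glue pricing is based on DPU (Data Processing Unit) hours. In US East (N. Virginia), ETL jobs cost $0.44 per DPU-hour, billed per second (with a one-minute minimum on Glue 2.0 and later), and crawlers are billed at the same $0.44 per DPU-hour with a 10-minute minimum per run. The Data Catalog is free for the first million objects stored and the first million requests per month. Rates vary by Region, and costs depend on job duration and the capacity you allocate.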

Can AWS Glue handle real-time data?

Yes, AWS Glue supports streaming ETL jobs built on Apache Spark Structured Streaming. They can process data from Amazon Kinesis Data Streams, Amazon MSK, or self-managed Apache Kafka in near real-time, enabling use cases like live analytics and event-driven processing.

How does AWS Glue compare to Lambda for ETL?

While Lambda is great for lightweight, event-driven tasks, AWS Glue is designed for complex, large-scale ETL workloads using Spark. Glue handles data transformation at scale with built-in support for Spark libraries, whereas a Lambda function is limited to 15 minutes per invocation and at most 10,240 MB of memory.

In summary, AWS Glue is a transformative tool for modern data integration. From its intelligent crawlers and serverless architecture to its deep AWS integration and support for open data formats, it empowers organizations to build scalable, efficient data pipelines. Whether you’re building a data lake, migrating to the cloud, or enabling real-time analytics, AWS Glue provides the tools you need—without the infrastructure headaches. As data continues to grow in volume and complexity, AWS Glue remains at the forefront of simplifying how we harness its value.
