AWS Bedrock: 7 Powerful Features You Must Know in 2024

Imagine building cutting-edge AI applications without wrestling with complex infrastructure. That’s exactly what AWS Bedrock promises—a seamless, serverless way to harness foundation models and transform your business. Let’s dive into how it’s reshaping the AI landscape.

What Is AWS Bedrock and Why It Matters

AWS Bedrock is Amazon Web Services’ fully managed service that makes it easier for developers and enterprises to build, customize, and deploy generative artificial intelligence (AI) applications using foundation models (FMs). It acts as a bridge between powerful pre-trained models and practical business applications, reducing the need for deep machine learning expertise or costly infrastructure setup.

Launched in 2023, AWS Bedrock provides access to a growing list of state-of-the-art foundation models from leading AI companies such as Anthropic, Meta, AI21 Labs, and Cohere, alongside Amazon’s own Titan models. These models can be used for a wide range of tasks, including natural language processing, code generation, and image creation, all through a single API.

One of the key advantages of AWS Bedrock is its integration within the broader AWS ecosystem. This means users benefit from existing security, compliance, scalability, and monitoring tools already available in AWS, such as IAM roles, VPCs, CloudTrail, and CloudWatch. This tight integration reduces operational overhead and accelerates time-to-market for AI-powered solutions.

Core Purpose of AWS Bedrock

The primary goal of AWS Bedrock is to democratize access to generative AI. By offering a serverless, pay-as-you-go model, it lowers the barrier to entry for startups, mid-sized companies, and large enterprises alike. Instead of investing millions in GPU clusters and hiring PhD-level researchers, organizations can start experimenting and deploying AI models with just a few API calls.

- Enables rapid prototyping of generative AI applications

- Supports customization via fine-tuning and Retrieval Augmented Generation (RAG)

- Ensures data privacy by keeping customer data within AWS infrastructure

This makes AWS Bedrock not just a technological tool, but a strategic enabler for innovation across industries—from healthcare and finance to media and customer service.

How AWS Bedrock Fits Into the AI Ecosystem

In the broader AI ecosystem, AWS Bedrock sits between raw model providers (like Hugging Face or open-source repositories) and end-user applications (like chatbots or content generators). It abstracts away the complexity of model hosting, scaling, and inference optimization, allowing developers to focus on application logic rather than infrastructure management.

Compared to alternatives like Google’s Vertex AI or Microsoft’s Azure AI Studio, AWS Bedrock emphasizes tight integration with AWS services, enterprise-grade security, and a curated selection of high-performing models. For organizations already invested in AWS, this creates a compelling value proposition.

“AWS Bedrock allows enterprises to innovate faster with generative AI while maintaining control over their data and infrastructure.” — AWS Executive Team

Key Features of AWS Bedrock That Set It Apart

AWS Bedrock isn’t just another API wrapper around large language models. It offers a suite of advanced features designed to make generative AI both accessible and production-ready. These capabilities go beyond simple text generation and enable enterprises to build robust, scalable, and secure AI applications.

From model customization to enterprise security, AWS Bedrock delivers a comprehensive toolkit for developers and architects. Let’s explore the standout features that make it a leader in the generative AI platform space.

Serverless Architecture and Auto-Scaling

One of the most significant advantages of AWS Bedrock is its serverless nature. Users don’t need to provision or manage any underlying infrastructure. When you invoke a model via the API, AWS automatically handles compute allocation, load balancing, and scaling based on demand.

This means you can handle sudden spikes in traffic—like during a product launch or marketing campaign—without worrying about server crashes or capacity planning. You only pay for the tokens processed, making it cost-efficient for both small experiments and large-scale deployments.

- No need to manage EC2 instances or container orchestration

- Automatic scaling from zero to thousands of requests per second

- Pay-per-use pricing model aligns costs with actual usage

This serverless approach significantly reduces operational complexity and allows teams to focus on building features instead of managing servers.
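To make the pay-per-token idea concrete, here is a minimal sketch of a per-request cost estimate. The function name and both prices are assumptions chosen for illustration; actual rates vary by model and region and should be taken from the current pricing page.

```python
def estimate_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Return the estimated charge in dollars for a single request."""
    input_cost = (input_tokens / 1000) * price_in_per_1k
    output_cost = (output_tokens / 1000) * price_out_per_1k
    return input_cost + output_cost


# Hypothetical prices, for illustration only (not real Bedrock rates).
PRICE_IN = 0.008   # assumed dollars per 1,000 input tokens
PRICE_OUT = 0.024  # assumed dollars per 1,000 output tokens

cost = estimate_cost(1500, 500, PRICE_IN, PRICE_OUT)
print(f"Estimated cost per request: ${cost:.4f}")
```

Multiplying the result by expected request volume gives a rough monthly figure, which is usually enough to compare models before committing to one.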

Access to Multiple Foundation Models

AWS Bedrock doesn’t lock you into a single model provider. Instead, it offers a marketplace-like experience where you can choose from a variety of foundation models, each suited for different use cases:

- Amazon Titan: Optimized for summarization, classification, and embedding tasks

- Claude by Anthropic: Known for strong reasoning, safety, and long-context understanding

- Meta’s Llama 2 and Llama 3: Open-source models ideal for code generation and conversational AI

- Jurassic-2 by AI21 Labs: Excels in creative writing and structured text generation

- Command by Cohere: Designed for enterprise search, summarization, and classification

This flexibility allows developers to test and compare models side-by-side, selecting the best fit for their specific application. For example, you might use Claude for customer support chatbots and Llama for internal code assistants.
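When comparing models side by side, keep in mind that each model family expects its own request body. The sketch below builds a body for three families; the helper name is hypothetical, and the field names reflect the provider formats as commonly documented at the time of writing, so verify them against the current model parameter reference before relying on them.

```python
import json


def build_request_body(model_id, prompt, max_tokens=300):
    """Return a JSON request body shaped for the given model family."""
    if model_id.startswith("anthropic.claude-v2"):
        # Claude v2 text completions expect Human/Assistant framing.
        payload = {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
        }
    elif model_id.startswith("amazon.titan-text"):
        payload = {
            "inputText": prompt,
            "textGenerationConfig": {"maxTokenCount": max_tokens},
        }
    elif model_id.startswith("meta.llama"):
        payload = {"prompt": prompt, "max_gen_len": max_tokens}
    else:
        raise ValueError(f"No body template for model: {model_id}")
    return json.dumps(payload)


for model in ("anthropic.claude-v2", "amazon.titan-text-express-v1"):
    print(model, build_request_body(model, "Summarize our refund policy."))
```

Keeping the per-family differences in one helper means the rest of the application can switch models by changing only the model ID.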

More details on supported models can be found at AWS Bedrock Official Page.

Model Customization with Fine-Tuning and RAG

While pre-trained models are powerful, they often lack domain-specific knowledge. AWS Bedrock addresses this with two key customization techniques: fine-tuning and Retrieval Augmented Generation (RAG).

Fine-tuning allows you to adapt a base model using your own data. For instance, a financial institution could fine-tune a model on regulatory documents to improve compliance accuracy. AWS Bedrock supports fine-tuning for select models, such as Amazon Titan, Cohere Command, and Meta Llama 2. The supported list changes over time, so check the current documentation before committing to a model.
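Fine-tuning data is typically supplied as a JSON Lines file of example pairs. The sketch below assumes a prompt/completion layout, which is the common shape for text-model fine-tuning; the helper name and sample records are hypothetical, and the exact schema should be confirmed for the model you choose.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as one JSON object per line."""
    lines = [
        json.dumps({"prompt": prompt, "completion": completion})
        for prompt, completion in examples
    ]
    return "\n".join(lines)


# Hypothetical training examples for illustration.
training_examples = [
    ("What is a KYC check?", "A process for verifying a customer's identity."),
    ("Define AML.", "Anti-money laundering controls and regulations."),
]

print(to_jsonl(training_examples))
```

The resulting text would normally be written to a file and uploaded to an S3 bucket that the fine-tuning job can read.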

RAG, on the other hand, enhances model responses by pulling in real-time data from your knowledge base (e.g., Amazon OpenSearch, S3, or RDS). This is ideal for dynamic environments where information changes frequently, such as customer service or technical support.

- Fine-tuning improves model accuracy on domain-specific tasks

- RAG enables up-to-date, factually accurate responses without retraining

- Both methods keep sensitive data within your AWS account rather than sharing it with model providers

Together, these tools allow businesses to move beyond generic AI responses and deliver personalized, context-aware experiences.
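The RAG flow described above can be sketched in a few lines: retrieve the most relevant passages, then place them in the prompt ahead of the question. This is a toy illustration, not how Bedrock implements retrieval. The word-overlap scoring stands in for a real vector search, and the function names and sample documents are assumptions.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by the number of words they share with the query."""
    query_words = set(query.lower().split())

    def overlap(doc):
        return len(query_words & set(doc.lower().split()))

    return sorted(documents, key=overlap, reverse=True)[:top_k]


def build_rag_prompt(query, documents):
    """Prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge-base entries.
docs = [
    "To reset your router hold the reset button for ten seconds",
    "Billing statements are issued on the first day of each month",
    "Service upgrades can be requested in the account portal",
]

print(build_rag_prompt("how do I reset my router", docs))
```

Because the knowledge lives in the retrieved passages rather than in the model weights, updating the documents changes the answers without any retraining.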

How AWS Bedrock Enables Enterprise-Grade AI Security

For enterprises, adopting AI isn’t just about performance—it’s about trust. AWS Bedrock is built with enterprise security at its core, ensuring that sensitive data remains protected throughout the AI lifecycle. This is critical for industries like healthcare, finance, and government, where compliance and data sovereignty are non-negotiable.

From encryption to access control, AWS Bedrock leverages the full power of AWS’s security framework to provide a secure foundation for generative AI applications.

Data Encryption and Privacy Controls

All data transmitted to and from AWS Bedrock is encrypted in transit using TLS 1.2 or later. Data that is stored at rest, such as fine-tuning datasets and custom model artifacts, is encrypted using AWS Key Management Service (KMS), and organizations can supply their own keys for full control over encryption.

Importantly, AWS does not use customer data to train its foundation models. This means your proprietary information, customer conversations, or internal documents remain confidential and are not shared with third parties.

- Encryption in transit and at rest protects data confidentiality

- KMS integration allows customer-managed keys (CMKs)

- Prompts and responses are not used for model training, which protects user privacy

This level of data protection makes AWS Bedrock one of the most trusted platforms for regulated industries.

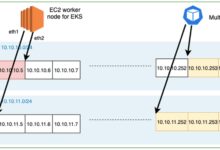

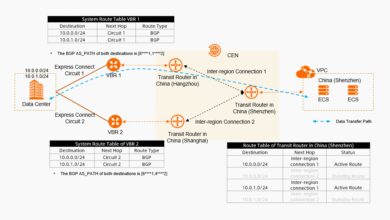

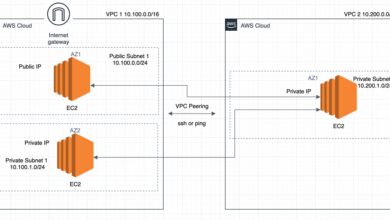

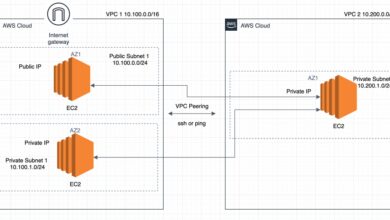

Integration with AWS IAM and VPC

AWS Bedrock integrates seamlessly with Identity and Access Management (IAM) to enforce granular access controls. You can define who can invoke which models, under what conditions, and with what level of permissions.

Additionally, Bedrock supports VPC endpoints, allowing you to call models without exposing traffic to the public internet. This is crucial for organizations that require strict network isolation and compliance with internal security policies.

- Use IAM policies to restrict model access by user or role

- VPC endpoints prevent data exfiltration via public networks

- CloudTrail logs provide audit trails for all API calls

These capabilities ensure that AI adoption doesn’t come at the cost of security or compliance.
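As an illustration of restricting model access, the sketch below builds an IAM policy document that allows invoking a single foundation model. The helper name is hypothetical, and the policy is a starting point under the assumption that only the InvokeModel action is needed; real deployments often add conditions or further actions.

```python
import json


def invoke_only_policy(region, model_id):
    """Return an IAM policy allowing InvokeModel on one foundation model."""
    # Foundation-model ARNs have an empty account field.
    model_arn = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                "Resource": model_arn,
            }
        ],
    }


policy = invoke_only_policy("us-east-1", "anthropic.claude-v2")
print(json.dumps(policy, indent=2))
```

Attaching a policy like this to an application role limits that application to the one model it was designed around.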

Use Cases: Real-World Applications of AWS Bedrock

AWS Bedrock isn’t just a theoretical platform—it’s being used today by companies across industries to solve real business problems. From automating customer service to accelerating software development, the applications are vast and impactful.

Let’s explore some of the most compelling use cases where AWS Bedrock is driving innovation and efficiency.

Customer Support Automation

One of the most popular uses of AWS Bedrock is in building intelligent chatbots and virtual agents. By leveraging models like Claude or Titan, companies can create conversational AI systems that understand complex queries, maintain context over long interactions, and provide accurate, human-like responses.

For example, a telecom provider might use AWS Bedrock to power a support bot that can troubleshoot network issues, explain billing statements, or guide customers through service upgrades—all without human intervention.

- Reduces response time from hours to seconds

- Lowers operational costs by automating routine inquiries

- Improves customer satisfaction with 24/7 availability

With RAG, these bots can pull information from internal knowledge bases, ensuring responses are accurate and up-to-date.
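Maintaining context across a long interaction usually means resending earlier turns with each request. A minimal sketch, assuming the Human/Assistant text format used by Claude v2 (the function name and sample dialogue are hypothetical):

```python
def format_conversation(turns, new_message):
    """Build a Claude v2 style prompt from prior turns plus a new message.

    turns is a list of (customer_text, bot_text) pairs, oldest first.
    """
    parts = []
    for customer_text, bot_text in turns:
        parts.append(f"\n\nHuman: {customer_text}\n\nAssistant: {bot_text}")
    # Leave the final Assistant turn open for the model to complete.
    parts.append(f"\n\nHuman: {new_message}\n\nAssistant:")
    return "".join(parts)


history = [("My internet is down.", "Is the router's power light on?")]
print(format_conversation(history, "Yes, but it is blinking red."))
```

In practice the history would be trimmed or summarized once it approaches the model's context limit.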

Content Generation and Marketing

Marketing teams are using AWS Bedrock to generate high-quality content at scale. Whether it’s drafting blog posts, creating product descriptions, or personalizing email campaigns, generative AI powered by Bedrock can significantly boost productivity.

A retail brand, for instance, could use the Jurassic-2 model to automatically generate thousands of unique product titles and descriptions based on inventory data. This not only saves time but also ensures consistency in tone and style.

- Generates SEO-friendly content in minutes

- Supports multilingual content creation

- Enables A/B testing of messaging variations

When combined with Amazon Personalize, AWS Bedrock can even tailor content to individual user preferences, enhancing engagement and conversion rates.

Code Generation and Developer Productivity

Developers are leveraging AWS Bedrock—especially models like CodeLlama and Command—to accelerate coding tasks. From generating boilerplate code to debugging complex functions, AI assistance is becoming a standard part of the development workflow.

Imagine a developer typing a comment like “Create a Python function to calculate Fibonacci sequence” and having the model instantly generate the code. This kind of automation reduces cognitive load and speeds up delivery cycles.

- Auto-generates code snippets and unit tests

- Explains legacy code in plain language

- Translates code between programming languages

Used alongside Amazon CodeWhisperer (which is powered by similar underlying technology), Bedrock enhances developer velocity across the software development lifecycle (SDLC).
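For the Fibonacci prompt mentioned above, a model might return something along these lines. This is a hand-written illustration of plausible output, not a captured model response.

```python
def fibonacci(n):
    """Return the first n Fibonacci numbers, starting from 0."""
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b
    return sequence


print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

Generated code still needs the same review and testing as code written by hand.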

Getting Started with AWS Bedrock: A Step-by-Step Guide

Ready to try AWS Bedrock? The onboarding process is straightforward, especially if you’re already using AWS. Here’s a practical guide to help you get started—from enabling the service to making your first API call.

Whether you’re a developer, data scientist, or architect, this walkthrough will set you on the right path to building your first generative AI application.

Enabling AWS Bedrock in Your Account

The first step is to enable AWS Bedrock in your AWS account. The service is available in multiple regions, but access to individual models is not enabled by default, and model availability varies by region.

To enable it:

- Log in to the AWS Management Console

- Navigate to the AWS Bedrock service page

- Click “Get Started” and request access to the models you want to use

- Wait for approval (some models require a review process)

Once approved, you’ll have access to the Bedrock console, SDKs, and API endpoints. You can also enable Bedrock via AWS CLI or CloudFormation for automated provisioning.
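Once access is granted, one way to check which models the account can see is the `list_foundation_models` call on the `bedrock` control-plane client. The sketch below is a hedged example: the helper and `main` function are illustrative names, and running `main()` assumes valid AWS credentials and a region where Bedrock is available.

```python
def list_model_ids(bedrock_client, provider=None):
    """Return model IDs, optionally filtered by provider name."""
    response = bedrock_client.list_foundation_models()
    return [
        summary["modelId"]
        for summary in response["modelSummaries"]
        if provider is None or summary.get("providerName") == provider
    ]


def main():
    # Imported here so the helper above can be used without boto3 installed.
    import boto3

    client = boto3.client("bedrock", region_name="us-east-1")
    for model_id in list_model_ids(client, provider="Anthropic"):
        print(model_id)


# Call main() from a session with AWS credentials configured.
```

Passing the client in as an argument keeps the helper easy to test with a stub.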

More details on access requests can be found at AWS Bedrock Setup Guide.

Using the AWS SDK to Call Models

After enabling the service, you can start invoking models using the AWS SDK. Here’s an example in Python using Boto3:

    import json
    import boto3

    client = boto3.client('bedrock-runtime', region_name='us-east-1')

    # Claude v2 expects the "\n\nHuman: ... \n\nAssistant:" prompt framing
    body = json.dumps({
        "prompt": "\n\nHuman: Explain quantum computing\n\nAssistant:",
        "max_tokens_to_sample": 300,
    })

    response = client.invoke_model(
        modelId='anthropic.claude-v2',
        body=body,
    )

    # The response body is a stream containing a JSON document
    print(response['body'].read().decode())

This script sends a prompt to Claude v2 and prints the raw JSON response. The bedrock-runtime client handles request signing, encryption in transit, and routing to the selected model automatically.

- Supports multiple programming languages (Python, JavaScript, Java, etc.)

- Uses standard AWS authentication (IAM roles, access keys)

- Uses the same InvokeModel operation for every model, although request and response body formats are model-specific

This simplicity allows developers to integrate AI into applications with minimal code changes.
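The response body arrives as JSON, so the generated text has to be pulled out of it. A minimal sketch, assuming the Claude v2 response shape in which the text sits under a `completion` key (the helper name and sample payload are illustrative):

```python
import json


def extract_completion(raw_body):
    """Return the generated text from a Claude v2 style response body."""
    payload = json.loads(raw_body)
    # Fall back to an empty string if the key is missing.
    return payload.get("completion", "").strip()


# Sample payload shaped like a response body, for illustration only.
sample = b'{"completion": " Quantum computers use qubits.", "stop_reason": "stop_sequence"}'
print(extract_completion(sample))
```

With a live call, the argument would be the bytes returned by `response['body'].read()`. Other model families use different response keys, so a helper like this needs one branch per family.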

Testing Models in the AWS Console

Before writing code, you can experiment with models directly in the AWS Bedrock console. The playground interface lets you type prompts, adjust parameters (like temperature and top_p), and compare outputs across different models.

This is invaluable for:

- Evaluating model quality and tone

- Testing prompt engineering strategies

- Comparing cost vs. performance trade-offs

The console also provides token usage estimates, helping you forecast costs before deployment.
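The same parameters can be set in code once a prompt works well in the playground. The sketch below assumes the Claude v2 body format and a 0 to 1 range for both `temperature` and `top_p`; the function name and default values are illustrative choices, not recommendations.

```python
import json


def claude_body(prompt, temperature=0.5, top_p=0.9, max_tokens=300):
    """Build a Claude v2 style request body with sampling parameters."""
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    return json.dumps(
        {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
            "temperature": temperature,
            "top_p": top_p,
        }
    )


# Lower temperature for more deterministic answers.
print(claude_body("List three uses of embeddings.", temperature=0.2))
```

Validating the ranges locally surfaces mistakes before a request is sent and billed.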

Comparing AWS Bedrock with Competitors

While AWS Bedrock is a powerful platform, it’s not the only player in the generative AI space. Understanding how it stacks up against competitors like Google Vertex AI, Microsoft Azure AI Studio, and open-source alternatives can help you make informed decisions.

Each platform has its strengths, but AWS Bedrock stands out in several key areas.

AWS Bedrock vs Google Vertex AI

Google Vertex AI offers strong support for custom model training and MLOps workflows. It integrates well with TensorFlow and Google’s own PaLM models. However, it requires more infrastructure management compared to Bedrock’s fully serverless model.

Vertex AI is ideal for organizations deeply embedded in the Google Cloud ecosystem and those needing advanced model tuning. But for rapid deployment and ease of use, AWS Bedrock has the edge.

- Bedrock: Simpler, serverless, faster time-to-market

- Vertex AI: More control over training pipelines, better for ML engineers

- Both offer enterprise security and compliance

Learn more about Vertex AI at Google Cloud Vertex AI.

AWS Bedrock vs Azure AI Studio

Microsoft’s Azure AI Studio provides access to OpenAI models (like GPT-4) and integrates tightly with Microsoft 365. This makes it attractive for enterprises using Teams, Outlook, and Office apps.

However, Azure AI often requires more configuration and lacks the breadth of model choices offered by AWS Bedrock. Additionally, AWS’s global infrastructure and pricing model can be more cost-effective for large-scale deployments.

- Azure AI: Best for Microsoft-centric organizations

- Bedrock: More model options, better scalability, deeper AWS integration

- Both support RAG and fine-tuning

For companies already using AWS, Bedrock is the natural choice.

Open-Source Alternatives vs Managed Services

Some organizations opt to self-host open-source models like Llama or Mistral using frameworks like Hugging Face or vLLM. While this offers maximum control, it comes with significant operational overhead.

Managing GPUs, optimizing inference, scaling horizontally, and securing endpoints require specialized skills and ongoing maintenance. In contrast, AWS Bedrock handles all of this automatically.

- Self-hosting: Higher control, higher cost and complexity

- Managed services: Faster deployment, lower TCO, better reliability

- Hybrid approach: Use Bedrock for prototyping, self-host for production (if needed)

For most businesses, the managed approach of AWS Bedrock delivers better ROI.

Future of AWS Bedrock: Trends and Roadmap

The field of generative AI is evolving rapidly, and AWS is continuously enhancing Bedrock to stay ahead. Understanding the future direction of the platform can help organizations plan their AI strategies effectively.

From multimodal models to real-time personalization, the next wave of innovations will make AWS Bedrock even more powerful.

Expansion of Model Providers and Types

AWS is expected to onboard more model providers, including specialized AI startups and industry-specific models. We may also see increased support for multimodal models that process text, images, and audio together.

For example, a future version of Bedrock could allow you to generate a marketing video by describing it in text, with the model producing both visuals and voiceover.

- More niche models for legal, medical, or engineering domains

- Support for real-time video generation and analysis

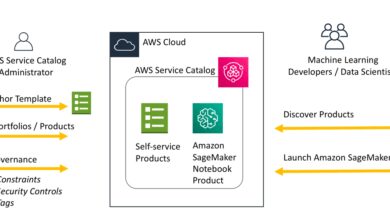

- Integration with Amazon SageMaker for hybrid workflows

This expansion will make Bedrock a one-stop platform for all generative AI needs.

Enhanced Customization and Control

Future updates are likely to include more advanced fine-tuning options, better explainability tools, and improved guardrails for responsible AI. AWS may also introduce model distillation features, allowing smaller, faster versions of large models to be deployed on edge devices.

Additionally, tighter integration with AWS AppConfig and Systems Manager could enable dynamic model switching based on user behavior or business rules.

- Dynamic model routing based on input type

- Real-time feedback loops for continuous improvement

- AI ethics dashboards for monitoring bias and fairness

These features will empower enterprises to build not just smart, but trustworthy AI systems.
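Routing by input type does not have to wait for a platform feature, since it can be approximated in application code today. The sketch below is a deliberately simple illustration: the keyword rules, the routing table, and the choice of model per category are all assumptions, and a production router would use a proper classifier.

```python
# Hypothetical routing table mapping request categories to model IDs.
ROUTES = {
    "code": "meta.llama2-13b-chat-v1",
    "summary": "amazon.titan-text-express-v1",
}
DEFAULT_MODEL = "anthropic.claude-v2"


def classify(text):
    """Assign a coarse category using simple keyword rules."""
    lowered = text.lower()
    if lowered.startswith("summarize"):
        return "summary"
    if "function" in lowered or "code" in lowered:
        return "code"
    return "chat"


def route(text):
    """Return the model ID that should handle this request."""
    return ROUTES.get(classify(text), DEFAULT_MODEL)


for request in ("Summarize this report", "Write a function to sort a list", "Hello"):
    print(request, "->", route(request))
```

Keeping the table in configuration rather than code would let the mapping change without a redeploy.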

Global Availability and Edge Deployment

Currently, AWS Bedrock is available in select regions. However, AWS is expected to expand its footprint to more global locations, reducing latency for international users.

There’s also potential for edge deployment via AWS Wavelength or Outposts, enabling low-latency AI inference in remote or offline environments—such as manufacturing plants or autonomous vehicles.

- Lower latency for real-time applications

- Offline AI capabilities for critical infrastructure

- Compliance with local data residency laws

This will open new use cases in IoT, defense, and healthcare.

What is AWS Bedrock used for?

AWS Bedrock is used to build and deploy generative AI applications without managing infrastructure. Common use cases include chatbots, content generation, code assistance, and data analysis—all powered by foundation models from leading AI providers.

Is AWS Bedrock free to use?

No, AWS Bedrock is not free, but it follows a pay-per-use pricing model. You pay based on the number of input and output tokens processed. There is no upfront cost or minimum fee, making it cost-effective for experimentation and scaling.

Which models are available on AWS Bedrock?

AWS Bedrock offers models from Amazon (Titan), Anthropic (Claude), Meta (Llama 2 and Llama 3), AI21 Labs (Jurassic-2), and Cohere (Command). New models are added regularly based on demand and performance.

How does AWS Bedrock ensure data privacy?

AWS Bedrock encrypts data in transit and at rest, allows customer-managed keys via KMS, and does not use customer data to train foundation models. It also supports VPC endpoints to keep traffic within private networks.

Can I fine-tune models on AWS Bedrock?

Yes, AWS Bedrock supports fine-tuning for select models, such as Amazon Titan, Cohere Command, and Meta Llama 2. You can customize models using your own data to improve performance on specific tasks while maintaining data security.

In conclusion, AWS Bedrock is revolutionizing how businesses adopt generative AI. With its serverless architecture, broad model selection, enterprise security, and seamless AWS integration, it empowers organizations to innovate faster and smarter. Whether you’re automating customer service, generating content, or boosting developer productivity, AWS Bedrock provides the tools you need to succeed. As the platform continues to evolve with new models, features, and global reach, its role as a cornerstone of enterprise AI will only grow stronger.
