AWS Athena: 7 Powerful Insights for Data Querying Success

Ever wished you could query massive datasets without managing servers or databases? AWS Athena makes that dream a reality—fast, flexible, and built on the power of Presto. Let’s dive into how this serverless tool is reshaping cloud analytics.

What Is AWS Athena and How Does It Work?

AWS Athena is a serverless query service that allows you to analyze data directly from files stored in Amazon S3 using standard SQL. Unlike traditional data warehouses, it doesn’t require setting up or managing infrastructure. You simply point Athena to your data in S3, define a schema, and start running queries.

Serverless Architecture Explained

The term ‘serverless’ can be misleading. It doesn’t mean there are no servers—it means you don’t have to provision, scale, or maintain them. AWS handles all the backend infrastructure automatically. With AWS Athena, you’re freed from worrying about clusters, nodes, or capacity planning.

- No servers to manage or patch

- No need to install or configure software

- Automatic scaling based on query complexity and volume

This architecture is ideal for organizations looking to reduce operational overhead while maintaining high performance.

Integration with Amazon S3

AWS Athena is deeply integrated with Amazon S3, Amazon’s scalable object storage service. Your data remains in S3, and Athena reads it in-place using a process called ‘schema-on-read.’ This means the structure (schema) is applied when you query the data, not when it’s stored.

- Data stays in S3—no data movement required

- Supports various file formats: CSV, JSON, Parquet, ORC, Avro

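- Enables cost-effective storage with tiered S3 classes (e.g., Standard, Standard-IA); note that objects archived to S3 Glacier Flexible Retrieval or Deep Archive are not directly queryable

This integration avoids a separate load step and reduces ETL bottlenecks, making AWS Athena a go-to for ad-hoc, interactive analytics.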

Underlying Query Engine: Presto

AWS Athena is powered by Presto, an open-source distributed SQL query engine originally developed by Facebook (newer Athena engine versions are based on Trino, a fork of Presto). Presto is designed for low-latency, high-concurrency queries across large datasets.

- Executes queries in memory for fast performance

- Supports ANSI SQL, making it familiar to data analysts

- Can join data across multiple sources (e.g., S3, RDS, DynamoDB) through federated query connectors

The Presto project describes it as an engine for interactive analytic queries against data sources ranging from gigabytes to petabytes, which helps explain Athena’s speed on large datasets.

Key Features That Make AWS Athena a Game-Changer

AWS Athena stands out in the crowded cloud analytics space due to its unique combination of simplicity, scalability, and integration. Let’s explore the core features that make it a favorite among data engineers and analysts.

Fully Managed and Serverless

One of the biggest advantages of AWS Athena is that it’s fully managed. AWS handles everything from patching and updates to scaling and security. This allows teams to focus on data analysis rather than infrastructure management.

- No need to provision clusters or instances

- No downtime for maintenance or upgrades

- Automatic concurrency and resource allocation

This feature is especially valuable for startups and small teams without dedicated DevOps resources.

Pay-Per-Query Pricing Model

AWS Athena uses a pay-per-query pricing model, charging based on the amount of data scanned per query. This makes it highly cost-effective for sporadic or unpredictable workloads.

- Cost: $5 per terabyte of data scanned

- No charges when not running queries

- Cost optimization possible through data partitioning and columnar formats

For example, if your query scans 10 GB of data, you pay about $0.05 (10 GB is roughly 1/100 of a terabyte, and 1/100 of $5 is $0.05). This granular billing is a major win for budget-conscious organizations.
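The arithmetic above can be sketched in a few lines of Python. This is a minimal estimate, not an official calculator: the function name `estimate_query_cost` is hypothetical, the default price is the $5 per terabyte quoted above, and the rounding up to the next megabyte with a 10 MB per-query minimum reflects AWS's published pricing rules as an assumption you should verify for your region.

```python
import math

MB = 1024 ** 2
TB = 1024 ** 4

def estimate_query_cost(bytes_scanned, price_per_tb=5.0, min_bytes=10 * MB):
    """Estimate the cost in USD of one query from the bytes it scans."""
    # Assumption: usage is rounded up to the next megabyte,
    # and every query is billed for at least min_bytes.
    billed = max(math.ceil(bytes_scanned / MB) * MB, min_bytes)
    return billed / TB * price_per_tb

# A query that scans 10 GB costs roughly five cents.
print(round(estimate_query_cost(10 * 1024 ** 3), 4))
```

Because of the per-query minimum, a query that touches only a few kilobytes is billed the same as one that scans 10 MB.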

Support for Multiple Data Formats and Sources

AWS Athena supports a wide range of data formats, including CSV, JSON, Apache Parquet, ORC, and Avro. It also integrates with AWS Glue Data Catalog, allowing you to define and reuse schemas across queries.

- Parquet and ORC offer columnar storage, reducing I/O and cost

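- JSON suits semi-structured data and CSV is simple and widely supported, but both are row-based, so queries scan more data than with columnar formats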

- Integration with AWS Glue enables metadata management

Additionally, Athena can query logs that other AWS services deliver to S3, such as CloudTrail logs and VPC flow logs, as well as application logs stored in S3.

Setting Up Your First Query in AWS Athena

Getting started with AWS Athena is straightforward. Whether you’re analyzing logs, customer data, or IoT streams, the setup process is consistent and user-friendly.

Step 1: Prepare Your Data in Amazon S3

Before querying, ensure your data is stored in an S3 bucket. Organize it logically—consider using prefixes (folders) to separate data by date, type, or source.

- Upload sample data (e.g., CSV or JSON files)

- Ensure proper IAM permissions for Athena to access the bucket

- Use S3 Lifecycle policies to move older data to cheaper storage tiers

For best performance, convert your data to columnar formats like Parquet or ORC using AWS Glue or EMR.

Step 2: Define a Table Using the AWS Glue Data Catalog

AWS Athena uses the Glue Data Catalog to store metadata about your data. You can create a table definition that maps to your S3 data.

- Go to the Athena console and open the query editor

- Run a CREATE EXTERNAL TABLE command

- Specify the schema (columns, data types), file format, and S3 location

Example:

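CREATE EXTERNAL TABLE IF NOT EXISTS logs_table (
  `timestamp` STRING,
  ip STRING,
  request STRING,
  status INT
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'
WITH SERDEPROPERTIES ('field.delim' = ',')
LOCATION 's3://my-bucket/logs/'
TBLPROPERTIES ('has_encrypted_data'='false');

This table definition tells Athena how to interpret your data when queried. The backticks around timestamp are needed because it is a reserved word in Athena DDL. The field.delim setting assumes comma-delimited files; adjust it to match your data.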

Step 3: Run Your First SQL Query

Once the table is defined, you can run SQL queries directly in the Athena console.

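- Type a simple query such as SELECT * FROM logs_table LIMIT 10;

- Execute the query and view results in the console

- Athena saves query results to your configured S3 output location as CSV; to write other formats such as JSON or Parquet, use a CTAS or UNLOAD statement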

You can also use tools like Amazon QuickSight, Tableau, or JDBC/ODBC drivers to connect to Athena for visualization.
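Queries can also be submitted programmatically. The sketch below assumes a boto3 Athena client is passed in (for example, one created with `boto3.client("athena")`). The helper name `run_query` and the polling interval are illustrative choices, not part of any AWS API. The calls `start_query_execution` and `get_query_execution` are the client methods it relies on.

```python
import time

def run_query(client, sql, database, output_location, poll_seconds=1.0):
    """Start an Athena query and wait until it reaches a terminal state."""
    started = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]
    while True:
        status = client.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        # QUEUED and RUNNING are the non-terminal states; keep polling on those.
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            return query_id, state
        time.sleep(poll_seconds)
```

A call such as `run_query(client, "SELECT * FROM logs_table LIMIT 10", "default", "s3://my-bucket/athena-results/")` returns the query ID and final state; the database name and results prefix here are placeholders. Taking the client as a parameter keeps the helper easy to test with a stub.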

Performance Optimization Techniques for AWS Athena

While AWS Athena is fast by design, performance can vary based on data structure, query complexity, and format. Applying optimization techniques can significantly reduce query time and cost.

Use Columnar File Formats (Parquet, ORC)

Storing data in columnar formats like Apache Parquet or ORC can drastically reduce the amount of data scanned during queries.

- Only relevant columns are read, minimizing I/O

- Supports advanced compression (e.g., Snappy, GZIP)

- Improves query speed and reduces costs

Columnar storage combined with compression typically produces much smaller files than the equivalent CSV. Reductions of around 75% are commonly cited, though the actual ratio depends on the data.

Partition Your Data Strategically

Data partitioning involves organizing your S3 data into directories based on values like date, region, or category. Athena can skip entire partitions during queries, reducing scan volume.

- Example: s3://bucket/logs/year=2023/month=09/day=15/

- Use WHERE clauses that filter on partition keys

- Leverage AWS Glue crawlers to automatically detect partitions

When queries target only a small subset of partitions, a well-partitioned dataset can reduce query costs by 90% or more.
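To make the directory layout concrete, here is a small sketch that builds a Hive-style prefix for a given date. The function name `partition_prefix` is hypothetical, and the bucket path is the illustrative one used in the example above.

```python
from datetime import date

def partition_prefix(base, day):
    """Build a Hive-style year=/month=/day= prefix for a given date."""
    # Zero-padded month and day keep prefixes sorted chronologically.
    return f"{base.rstrip('/')}/year={day:%Y}/month={day:%m}/day={day:%d}/"

print(partition_prefix("s3://bucket/logs", date(2023, 9, 15)))
# s3://bucket/logs/year=2023/month=09/day=15/
```

Writing files under prefixes produced this way is what lets a filter on year, month, and day skip every other directory.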

Compress and Convert Data Efficiently

Compressing your data reduces storage size and the amount of data scanned during queries. Formats like GZIP, BZIP2, or Snappy work well with Athena.

- Compressed files are read efficiently by Athena

- Combine compression with columnar formats for maximum savings

- Use AWS Lambda or Glue jobs to automate conversion pipelines

For instance, converting 1 TB of uncompressed CSV to Snappy-compressed Parquet can reduce queryable data to ~200 GB, cutting the cost of a full-table scan from $5 to about $1 per query.
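Besides Lambda or Glue jobs, one way to do this conversion from within Athena itself is a CREATE TABLE AS SELECT (CTAS) statement. The sketch below only builds the SQL text. The function name `build_ctas`, the target table name, and the S3 path are hypothetical, and the `write_compression` property should be checked against the table properties your Athena engine version supports.

```python
def build_ctas(source_table, target_table, external_location):
    """Return a CTAS statement that rewrites a table as Snappy-compressed Parquet."""
    # Identifiers are interpolated as-is, so pass only trusted names.
    return (
        f"CREATE TABLE {target_table}\n"
        "WITH (\n"
        "  format = 'PARQUET',\n"
        "  write_compression = 'SNAPPY',\n"
        f"  external_location = '{external_location}'\n"
        ")\n"
        f"AS SELECT * FROM {source_table};"
    )

print(build_ctas("logs_table", "logs_parquet", "s3://my-bucket/logs-parquet/"))
```

Keep in mind that the CTAS query is itself billed for the data it scans, so the conversion pays off only when the converted table is queried repeatedly.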

Security and Access Control in AWS Athena

Security is paramount when dealing with sensitive data. AWS Athena integrates with AWS Identity and Access Management (IAM), AWS Key Management Service (KMS), and other security tools to ensure data protection.

IAM Policies for Fine-Grained Access

You can control who can run queries, access specific databases, or view results using IAM policies.

- Define policies that restrict access to certain S3 buckets

- Limit query execution to specific users or roles

- Use conditions to enforce MFA or IP restrictions

Example policy snippet:

{
  "Effect": "Allow",
  "Action": [
    "athena:StartQueryExecution",
    "athena:GetQueryResults"
  ],
  "Resource": "arn:aws:athena:us-east-1:123456789012:workgroup/primary"
}

This ensures only authorized users can interact with Athena.
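The snippet above is a single statement; a complete IAM policy wraps it in a document with a Version and a Statement list. The following sketch generates such a document. The function name `athena_query_policy` is hypothetical, and `athena:GetQueryExecution` is added here on the assumption that callers need to poll query status.

```python
import json

def athena_query_policy(region, account_id, workgroup):
    """Build an IAM policy document allowing queries in one Athena workgroup."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "athena:StartQueryExecution",
                    "athena:GetQueryExecution",
                    "athena:GetQueryResults",
                ],
                "Resource": f"arn:aws:athena:{region}:{account_id}:workgroup/{workgroup}",
            }
        ],
    }

print(json.dumps(athena_query_policy("us-east-1", "123456789012", "primary"), indent=2))
```

In practice, users also need permissions on the underlying S3 buckets and the Glue Data Catalog, which this sketch does not cover.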

Data Encryption with AWS KMS

AWS Athena supports encryption at rest using AWS KMS. Query results stored in S3 can be encrypted automatically.

- Enable encryption in the Athena workgroup settings

- Use customer-managed keys (CMKs) for greater control

- Ensure S3 buckets have default encryption enabled

This protects sensitive output and helps you meet the requirements of regulations like GDPR or HIPAA.

Audit and Monitor with CloudTrail and CloudWatch

To maintain accountability, AWS Athena integrates with AWS CloudTrail and Amazon CloudWatch.

- CloudTrail logs all Athena API calls (e.g., query execution, table creation)

- CloudWatch metrics track query duration, data scanned, and errors

- Set up alarms for unusual activity or cost spikes

These tools help you maintain compliance and troubleshoot issues quickly.

Use Cases and Real-World Applications of AWS Athena

AWS Athena isn’t just a toy for developers—it’s a powerful tool used across industries for real business impact. Let’s explore some practical applications.

Analyzing Log Files at Scale

Organizations generate massive amounts of log data from applications, servers, and networks. AWS Athena allows you to query these logs without setting up complex pipelines.

- Analyze CloudTrail logs to audit AWS API usage

- Query VPC flow logs to detect network anomalies

- Parse application logs to identify errors or performance bottlenecks

For example, Netflix has publicly described using Presto, the engine family behind Athena, for interactive analytics on data stored in S3.

Business Intelligence and Reporting

With integration to tools like Amazon QuickSight and Tableau, AWS Athena serves as a backend for dynamic dashboards and reports.

- Connect BI tools via JDBC/ODBC drivers

- Run ad-hoc queries for sales, marketing, or finance teams

- Combine data from multiple S3 sources for unified reporting

Companies like Airbnb use similar architectures to power real-time analytics for decision-makers.

Data Lake Querying and Exploration

AWS Athena is a cornerstone of modern data lake architectures. It enables self-service analytics on raw, unstructured, or semi-structured data.

- Explore data before building ETL pipelines

- Join datasets from different departments (e.g., sales + support)

- Support data science teams with SQL access to raw data

According to AWS customer case studies, enterprises like FINRA use Athena to query petabytes of financial data for compliance.

Limitations and Challenges of AWS Athena

While AWS Athena offers many advantages, it’s not a one-size-fits-all solution. Understanding its limitations helps you design better architectures.

No Support for Real-Time Streaming Queries

AWS Athena is designed for interactive, on-demand queries over data at rest in S3, not for continuous stream processing. Queries typically take from a few seconds to several minutes, making it unsuitable for streaming use cases.

- Not ideal for dashboards requiring sub-second refresh

- Consider Amazon Managed Service for Apache Flink (formerly Kinesis Data Analytics) for real-time needs

- Serve frequently accessed data from a faster store (e.g., Amazon Redshift or a Redis cache)

For true real-time, pair Athena with other AWS services in a hybrid setup.

Performance Depends on Data Organization

Athena’s speed is highly dependent on how your data is stored. Poorly structured or unpartitioned data can lead to slow, expensive queries.

- Queries on uncompressed CSV files can be around 10x slower than the same queries on Parquet

- Queries scanning terabytes of data may take minutes

- Requires upfront planning for optimal performance

Invest time in data modeling and transformation to avoid performance pitfalls.

Cost Can Spiral Without Optimization

While pay-per-query sounds cheap, costs can add up quickly if queries scan large datasets inefficiently.

- Unfiltered queries on 1 TB of data cost $5 each

- Repeated queries without caching increase expenses

- Lack of query governance can lead to waste

Implement query reviews, use workgroups with enforced limits, and monitor spending via AWS Cost Explorer.

Best Practices for Maximizing AWS Athena Efficiency

To get the most out of AWS Athena, follow these proven best practices that top cloud teams use to balance performance, cost, and usability.

Organize Data with Logical Partitioning

Partitioning by time (e.g., date, hour) or category (e.g., region, product) allows Athena to skip irrelevant data during queries.

- Use Hive-style partitioning: s3://bucket/data/year=2023/month=09/

- Update partition metadata using MSCK REPAIR TABLE or AWS Glue crawlers

- Avoid over-partitioning, which can create too many small files

For queries that filter on partition keys, this simple step can reduce query costs by 80% or more.
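MSCK REPAIR TABLE rescans the whole table location. When you already know which partition was just written, a targeted ALTER TABLE ADD PARTITION statement is an alternative. The sketch below builds that statement. The function name `add_partition_sql` and the table name `events` are hypothetical, and the table is assumed to declare `year` and `month` as string partition columns.

```python
def add_partition_sql(table, location, **partition):
    """Build an ALTER TABLE statement that registers one partition."""
    # Keyword arguments keep their order, so the spec matches the call site.
    spec = ", ".join(f"{key} = '{value}'" for key, value in partition.items())
    return (
        f"ALTER TABLE {table} ADD IF NOT EXISTS "
        f"PARTITION ({spec}) LOCATION '{location}';"
    )

print(add_partition_sql(
    "events",
    "s3://bucket/data/year=2023/month=09/",
    year="2023",
    month="09",
))
```

The IF NOT EXISTS clause makes the statement safe to rerun from a scheduled job.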

Leverage AWS Glue for Metadata Management

AWS Glue is a fully managed ETL service that integrates seamlessly with Athena. Use Glue crawlers to automatically infer schemas and populate the Data Catalog.

- Automate table creation from S3 data

- Supports custom classifiers for non-standard formats

- Enables schema versioning and evolution

This reduces manual effort and ensures consistency across teams.

Use Workgroups for Query Isolation and Cost Control

Workgroups in AWS Athena allow you to separate query environments (e.g., dev, prod) and enforce settings like encryption and query limits.

- Create separate workgroups for different teams

- Set data usage limits to prevent runaway costs

- Enforce the query result location and output encryption

This is crucial for enterprise governance and cost accountability.
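As a rough illustration, the settings above map onto the request that boto3's Athena client accepts in `create_work_group`. The helper name `workgroup_request` and all argument values are hypothetical, and the per-query scan limit is expressed in bytes.

```python
def workgroup_request(name, output_location, kms_key_arn, max_bytes_per_query):
    """Build keyword arguments for an Athena create_work_group call."""
    return {
        "Name": name,
        "Configuration": {
            "ResultConfiguration": {
                "OutputLocation": output_location,
                "EncryptionConfiguration": {
                    "EncryptionOption": "SSE_KMS",
                    "KmsKey": kms_key_arn,
                },
            },
            # Override client-side settings with the workgroup's settings.
            "EnforceWorkGroupConfiguration": True,
            "PublishCloudWatchMetricsEnabled": True,
            # Queries that would scan more than this many bytes are cancelled.
            "BytesScannedCutoffPerQuery": max_bytes_per_query,
        },
    }
```

With a real client, the result would be passed as `client.create_work_group(**request)`. Building the request separately makes it easy to review limits per team before anything is created.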

What is AWS Athena used for?

AWS Athena is used to run SQL queries directly on data stored in Amazon S3 without needing to set up databases or servers. It’s commonly used for log analysis, business intelligence, data lake querying, and ad-hoc analytics.

Is AWS Athena free to use?

AWS Athena is not free, but it follows a pay-per-query model. You pay $5 per terabyte of data scanned (pricing can vary by region). Athena itself does not charge for storage or for idle time, though the underlying S3 storage is billed separately, making it cost-effective for intermittent use.

How fast is AWS Athena?

Query speed in AWS Athena depends on data size, format, and complexity. Simple queries on optimized data (e.g., Parquet) can return results in seconds. Large, unoptimized queries may take minutes.

Can AWS Athena query data from other sources?

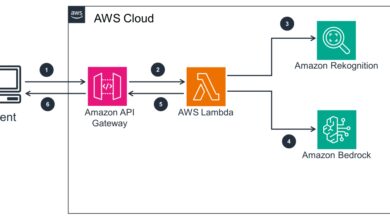

Yes, AWS Athena can query data from multiple sources using Federated Query. This includes Amazon RDS, DynamoDB, S3, and even external data stores via Lambda functions.

How does AWS Athena differ from Amazon Redshift?

AWS Athena is serverless and query-on-demand, while Amazon Redshift is a managed data warehouse traditionally built around provisioned clusters (a serverless option is also available). Athena is ideal for ad-hoc queries; Redshift suits high-performance, continuous analytics.

AWS Athena is a powerful, flexible tool that democratizes data access across organizations. By eliminating infrastructure management and supporting standard SQL, it enables teams to focus on insights rather than setup. While it has limitations around real-time performance and cost control, proper optimization—using columnar formats, partitioning, and Glue integration—can unlock its full potential. Whether you’re analyzing logs, building reports, or exploring a data lake, AWS Athena offers a compelling blend of simplicity and scalability. As cloud analytics evolve, Athena remains a key player in the serverless data revolution.
